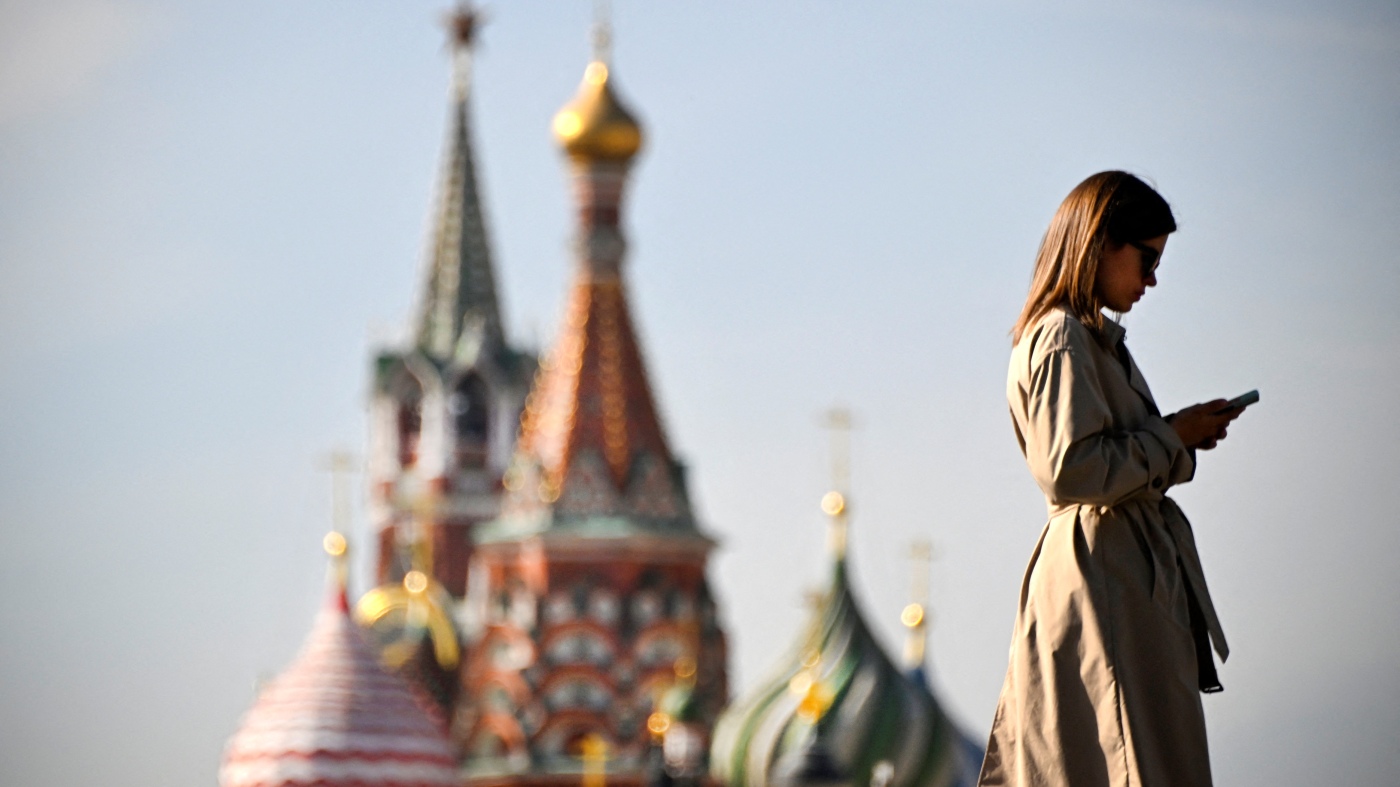

A woman walks in front of the Kremlin on September 23, 2024. U.S. intelligence officials say Russia is deploying artificial intelligence tools to try to sway American voters ahead of the November election.

Alexander Nemenov/AFP via Getty Images/AFP


Russia is the most active foreign influencer using artificial intelligence to generate content targeting the 2024 presidential election, U.S. intelligence officials said on Monday.

An official from the Office of the Director of National Intelligence, who spoke to reporters on condition of anonymity, said the cutting-edge technology is making it easier for Russia and Iran to quickly and persuasively produce often polarizing content to sway American voters.

“The [intelligence community] views AI as an enabler of malign influence, but not yet as a revolutionary influence tool,” the official said. “In other words, information operations are the threat, and AI is an enabler.”

Intelligence officials have said they’ve seen AI used in foreign elections before. “Today’s update makes it clear that this is now happening here,” the ODNI official said.

Russian influence operations have spread synthetic images, videos, audio and text online, the officials said, including AI-generated content “about prominent American figures” and content that seeks to highlight divisive issues such as immigration, which they said is consistent with the Kremlin’s broader goals of promoting former President Donald Trump and disparaging Vice President Kamala Harris.

But Russia has also used lower-tech methods: ODNI officials said Russian influence actors staged a video in which a woman claimed to be the victim of a hit-and-run by Harris in 2011. There is no evidence that this ever happened. Microsoft also said last week that Russia was behind the video, which was spread by a website purporting to be a fictitious San Francisco television station.

ODNI officials also said Russia was behind a doctored video of a Harris speech, which may have been altered using editing tools or AI and was then shared on social media and elsewhere.

“One of the efforts by Russian actors is to create this media in an effort to encourage its proliferation,” the ODNI official said.

The official said the Harris video was altered in a variety of ways to “paint a negative light on her personally and relative to her opponent” and to focus on issues that Russia considers divisive.

Officials said Iran is also using AI to generate social media posts and to write fake articles for websites posing as legitimate news organizations. Intelligence agencies say Iran is trying to undermine former President Trump in the 2024 election.

The officials said Iran is using AI to create such content in both English and Spanish, and that it targets Americans “on polarizing issues across the political spectrum,” including the war in Gaza and the presidential candidates.

China, the third-ranked foreign threat to U.S. elections, is using AI in a wide range of influence operations aimed at shaping global perceptions of China and amplifying divisive issues in the U.S., such as drug use, immigration and abortion, officials said.

But officials said they had not seen China use AI in operations aimed at the outcome of the vote in the U.S. Intelligence agencies have said Beijing’s influence operations are focused on down-ballot races rather than the presidential election.

U.S. officials, lawmakers, tech companies and researchers worry that AI-based manipulation, such as deepfake video or audio that makes candidates appear to do or say things they did not, or audio designed to mislead voters about the voting process, could upend this year’s election campaign.

These threats could still materialize as Election Day approaches, but so far AI has more often been used in other ways: by foreign adversaries to increase productivity and boost volume, and by political supporters to generate memes and jokes.

ODNI officials said Monday that foreign powers have been slow to overcome three main obstacles that keep AI-generated content from posing a greater risk to U.S. elections: first, getting around the guardrails built into many AI tools without being detected; second, developing their own sophisticated models; and third, strategically targeting and distributing AI content.

As Election Day approaches, the intelligence community will be on the lookout for foreign efforts to introduce deceptive or AI-generated content in a variety of ways, including “laundering material through prominent figures,” using fake social media accounts or websites posing as news organizations, or “publicizing purported ‘leaks’ of AI-generated content deemed sensitive or controversial,” the ODNI report said.

Earlier this month, the Justice Department accused Russian state broadcaster RT, which the U.S. government says acts as an arm of Russian intelligence, of funneling nearly $10 million to pro-Trump American influencers who posted videos critical of Harris and Ukraine. The influencers said they had no idea the funds came from Russia.